TL;DR - Key takeaways:

And most importantly: don’t wait for perfection. In AI-driven crises, early clarity beats perfect messaging.

Artificial intelligence is now part of the media ecosystem whether we like it or not.

Search engines summarise stories before readers click through. Chatbots answer questions about your company using whatever information they can find. And generative tools produce financial briefings, draft social posts, and pull “key facts” from your newsroom in seconds.

And that’s great - convenience is VERY convenient. But it also creates a new layer of risk.

If AI pulls outdated data, blends your narrative with a competitor’s, fabricates a quote, or amplifies a deepfake, the correction cycle is different from that of a traditional media error. There is no single journalist to call. No clear publication to request a correction from. You are dealing with distributed outputs across platforms that keep learning from what is already online.

A 2024 survey on generative AI in PR found that roughly 75 percent of professionals using AI worry that newer practitioners may rely too heavily on automated tools and lose core communications judgment. The same research showed only about one in five agencies consistently disclose their use of AI to clients, which points to a growing transparency gap.

In other words, the industry is still working out how to use these tools responsibly. Meanwhile, they are already shaping public perception.

So the question is not whether AI will affect your crisis response. It will. The question is whether you have a playbook for when it goes wrong.

The role of AI in public relations

AI in public relations is here. It’s not going anywhere.

Teams are already using AI for:

- Drafting and refining press releases

- Media monitoring and sentiment analysis

- Visual generation

- Coverage summaries

- Risk scanning

But the bigger (and more turbulent) shift is external.

AI systems now:

- Generate summaries in search results

- Provide instant answers about your company

- Aggregate commentary across multiple sources

- Surface “background information” for journalists

- Repackage online narratives into authoritative-sounding responses

That last point is important.

If AI systems draw on fake, unreliable or outdated information, they can produce outputs that sound confident but are incorrect. That risk has been repeatedly flagged in industry discussions about generative AI and communications.

This means your owned media is now your most important source material, not just a distribution channel.

And during a crisis, timing matters. If AI generates summaries before your official statement goes live, it can lock in a narrative before you have framed it yourself.

Which brings us to the scenarios you need to plan for.

How to handle a PR crisis: AI edition

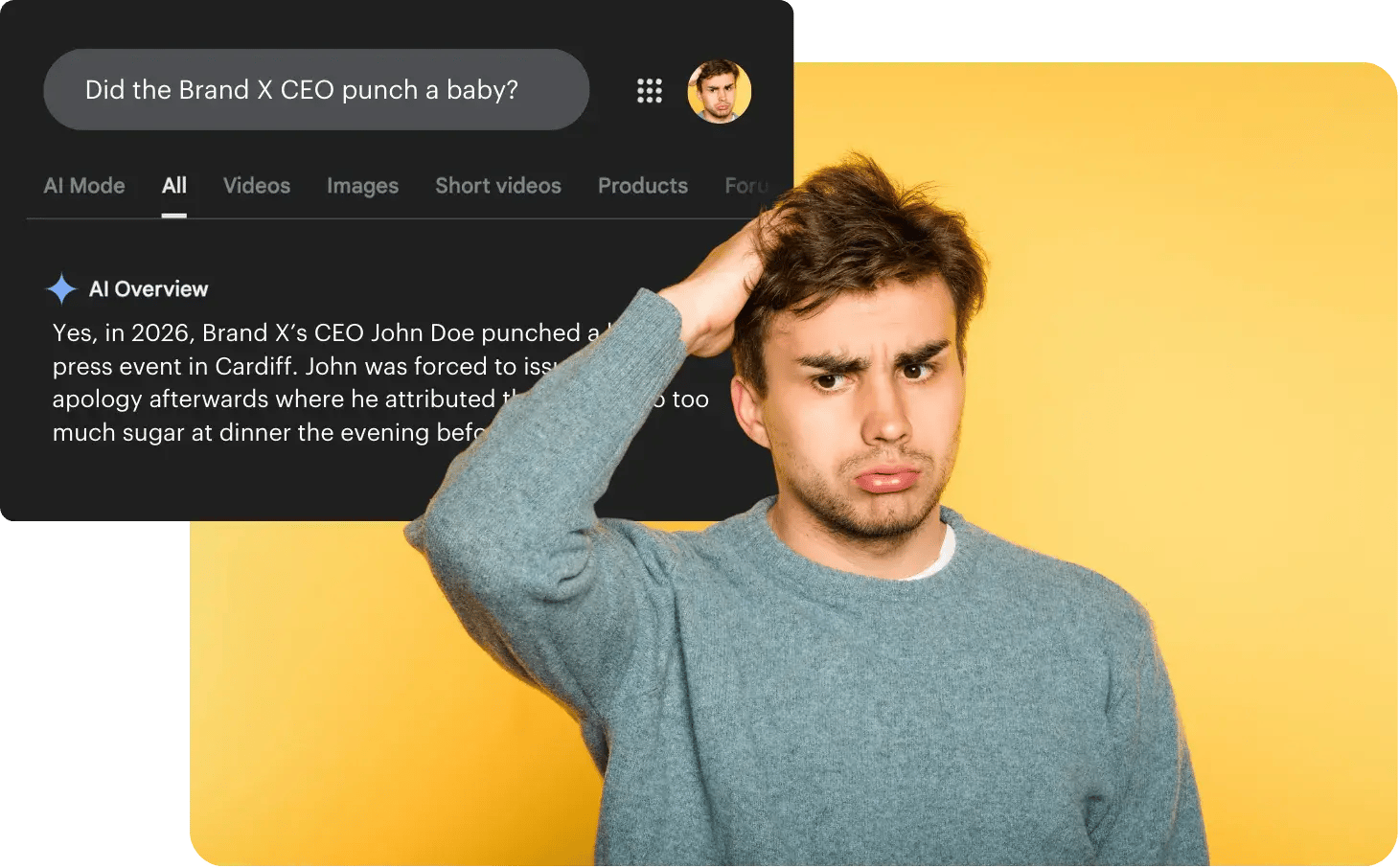

1. AI cites incorrect information about your brand

This is one of the most common issues.

A chatbot states that your company was involved in a controversy that never happened. A search AI pulls a statistic from a ten-year-old blog post. An executive profile contains outdated role information.

It may seem minor. It’s not.

AI outputs often get screenshotted and shared internally or externally. They influence journalists, investors, candidates, and partners.

What to do

- Audit the source trail.

Identify where the incorrect information is coming from. Is it an outdated press release? A third-party article? A forum thread?

- Correct the primary source first.

AI systems learn from what is published. Update or remove inaccurate content at the source. Publish a clearly dated correction if needed.

- Strengthen your authoritative content.

Publish a clear, fact-based page or statement that directly addresses the misinformation. Make it structured, factual, and easy to scan.

- Document the issue internally.

Track when it appeared, where, and how it was resolved. This is now part of your crisis logging.

- Escalate if it materially affects business.

In some cases, formal platform feedback or legal review may be necessary.
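If your crisis log lives in a spreadsheet or a small internal tool, the "when, where, and how it was resolved" fields could be sketched roughly like this. It is a minimal illustration, not a prescribed format: the `AIIncident` class, its field names, and the sample values are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AIIncident:
    """One crisis-log entry. All field names here are illustrative assumptions."""
    platform: str            # where the output appeared (chatbot, search summary, ...)
    claim: str               # the incorrect statement, quoted as it was seen
    suspected_source: str    # where the AI likely picked it up
    first_seen: datetime     # when the team first spotted it
    resolved_at: Optional[datetime] = None
    resolution: str = ""

    def resolve(self, note: str, when: Optional[datetime] = None) -> None:
        """Record how and when the issue was fixed (defaults to now, in UTC)."""
        self.resolution = note
        self.resolved_at = when or datetime.now(timezone.utc)

    @property
    def is_open(self) -> bool:
        return self.resolved_at is None


log: list[AIIncident] = []

# Hypothetical example entry
incident = AIIncident(
    platform="example chatbot",
    claim="Company X was fined in 2015",
    suspected_source="outdated third-party blog post",
    first_seen=datetime(2025, 1, 10, 9, 30, tzinfo=timezone.utc),
)
log.append(incident)

incident.resolve(
    "Published dated correction; asked the blog owner to update the post",
    when=datetime(2025, 1, 12, 14, 0, tzinfo=timezone.utc),
)

# Anything still unresolved is what you review in the next crisis stand-up
open_items = [entry for entry in log if entry.is_open]
print(len(open_items))
```

Keeping the suspected source next to each claim makes it easier to spot when several incidents trace back to the same outdated page.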

What NOT to do

- Do not dismiss it because “it’s just AI.”

- Do not correct it quietly without updating visible content.

- Do not assume the issue will disappear on its own.

If the wrong information is online, AI will likely reuse it. And this can create a snowball effect. One model cites it, another picks it up, and suddenly, outdated facts start showing up everywhere.

2. An AI attributes a competitor’s narrative to your brand

This is more subtle, and more difficult to deal with.

An AI summary blends your company with a competitor’s positioning. It assigns their sustainability claims to you. It mixes up product lines. It merges reputational histories.

This usually happens when companies operate in similar categories and share keywords.

What to do

- Clarify your positioning publicly.

Strengthen your own narrative pages. Make the differences between you and your competitors explicit.

- Use structured content.

FAQs, fact sheets, executive bios, and newsroom posts should clearly define your company’s activities and scope.

- Review metadata and tagging.

Ambiguous titles and headlines increase confusion. Refer back to your public position and make sure there is a clear throughline.

- Brief leadership.

This may surface in investor conversations or analyst calls. Prepare talking points.

- Monitor recurrence.

If AI systems repeatedly blend narratives, you may need a broader content hygiene strategy, or you may need to redefine your brand positioning to be more distinctive.

What NOT to do

- Do not attack the competitor publicly.

- Do not issue defensive statements that amplify confusion.

- Do not ignore minor misattributions if they repeat.

Repetition hardens perception.

3. Your executive is caught up in a deepfake scandal

Deepfakes are no longer theoretical.

In 2024, several companies reported incidents where fraudsters used AI-generated voice or video to impersonate executives in financial scams. Political deepfakes also surged globally, especially around elections. And this isn’t fringe anymore: according to Jumio’s Global Identity Survey 2024, 60% of consumers encountered a deepfake video in the past year alone.

We see this play out in the recent Prime Video film G20, which turns deepfakes into a full-blown geopolitical action spectacle. It’s wildly over the top (and not exactly ‘good’), but uncomfortably close to the impersonation scams comms teams are already dealing with in real life.

If a manipulated video of your CEO appears online, making inflammatory remarks, you do not have the luxury of a slow response.

What to do

- Verify immediately.

Confirm internally that the content is fake. Document evidence.

- Respond quickly and clearly.

Issue a factual statement: the content is manipulated. Avoid speculation.

- Use your owned channels.

Publish the response prominently in your newsroom and on corporate social channels.

- Coordinate legal and security teams.

Deepfakes can overlap with fraud or market manipulation.

- Prepare media Q&A.

Journalists will ask how you know it is fake and what steps you are taking.

- Consider forensic validation.

Independent technical verification strengthens credibility.

What NOT to do

- Do not stay silent, hoping things die down.

- Do not over-explain technical details in your first statement.

- Do not frame it emotionally. Stick to facts.

Clarity stabilises the situation.

4. AI generates summaries before your first statement goes live during a crisis

This is the scenario most teams underestimate.

A crisis breaks. Social chatter accelerates. Search AI produces a summary based on early reporting or speculation. Your official statement is still in draft.

The narrative gap is filled without you.

What to do

- Shorten internal approval cycles.

Pre-align leadership on crisis templates in advance.

- Publish holding statements early.

Even a brief acknowledgement gives AI systems a factual anchor.

- Timestamp clearly.

Make updates visible and chronological.

- Centralise updates.

Use one authoritative page that can be updated in real time.

- Monitor AI outputs.

During active crises, search and query your company across AI tools to see how the story is being summarised.
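To make "timestamp clearly" and "centralise updates" concrete, here is a small sketch of how one authoritative page could render its updates in chronological order with explicit timestamps. The `Update` class and `render_updates` function are hypothetical names, and the plain-text output is only a stand-in for whatever your newsroom platform actually produces.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Update:
    posted_at: datetime  # must be timezone-aware so the UTC label is accurate
    text: str


def render_updates(updates: list[Update]) -> str:
    """Return one line per update, oldest first, each with a UTC timestamp."""
    ordered = sorted(updates, key=lambda u: u.posted_at)
    lines = []
    for u in ordered:
        stamp = u.posted_at.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M UTC")
        lines.append(f"[{stamp}] {u.text}")
    return "\n".join(lines)


# Hypothetical updates, deliberately added out of order
updates = [
    Update(datetime(2025, 3, 1, 11, 40, tzinfo=timezone.utc),
           "Initial findings confirmed; full statement to follow."),
    Update(datetime(2025, 3, 1, 8, 15, tzinfo=timezone.utc),
           "We are aware of the reports and are investigating."),
]

page = render_updates(updates)
print(page)
```

Sorting by timestamp rather than by insertion order means a late-approved update still lands in the right place on the page.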

What NOT to do

- Do not wait for the “perfect” statement.

- Do not fragment updates across disconnected channels.

- Do not assume media coverage alone will shape the narrative.

Speed now influences machine interpretation.

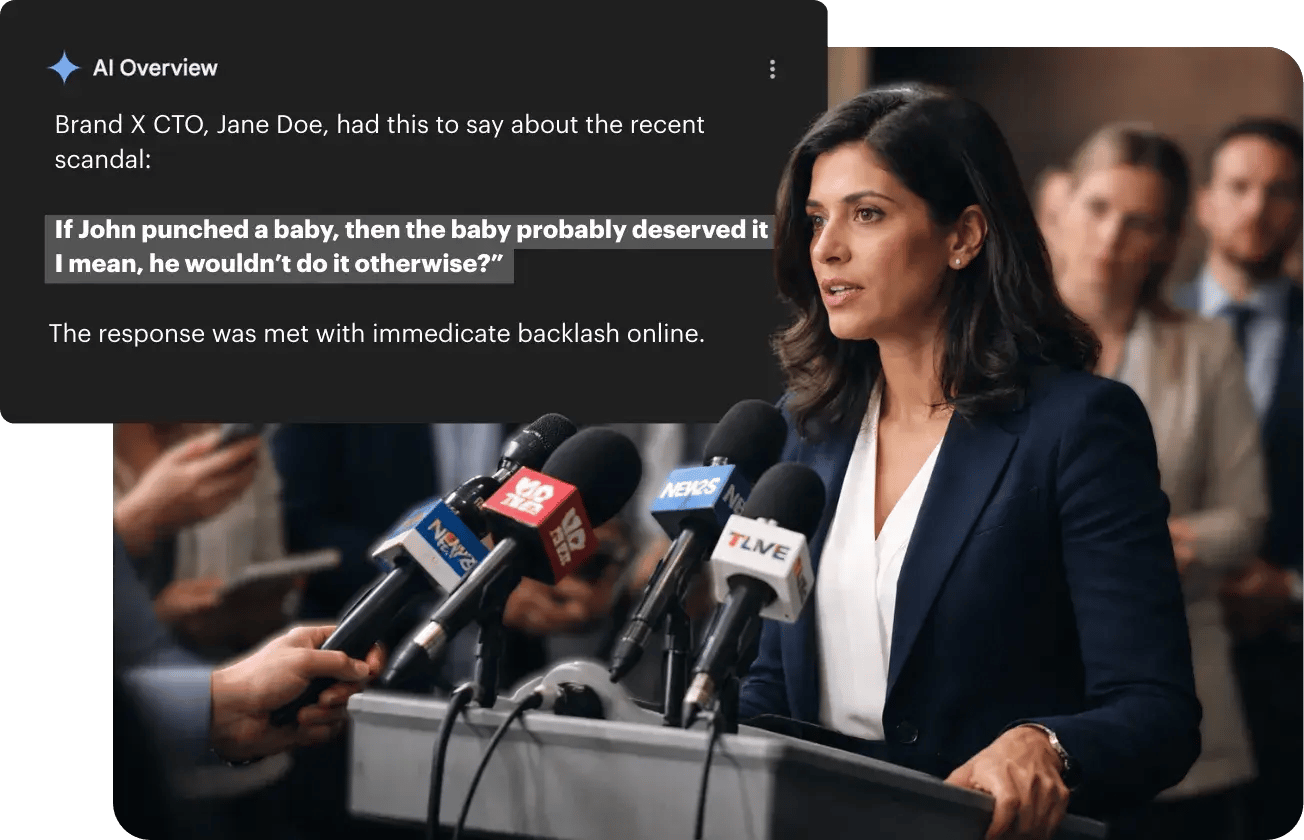

5. A fake executive quote that was never said goes viral

Fabricated quotes spread quickly, especially if they confirm existing biases.

AI tools can generate plausible executive statements that look real. Screenshots circulate. Context disappears.

What to do

- Publicly correct with precision.

Quote the fabricated line directly and state clearly that it was never said.

- Provide verifiable alternatives.

Link to official transcripts, interviews, or newsroom posts.

- Leverage executive visibility.

A short, controlled video statement can neutralise doubt.

- Brief internal teams.

Sales and HR may receive questions before comms does.

- Document and escalate if necessary.

Legal review may be appropriate if the quote affects market perception.

What NOT to do

- Do not issue vague denials.

- Do not attack individuals sharing the quote.

- Do not let it circulate internally without response.

Silence allows fiction to become fact.

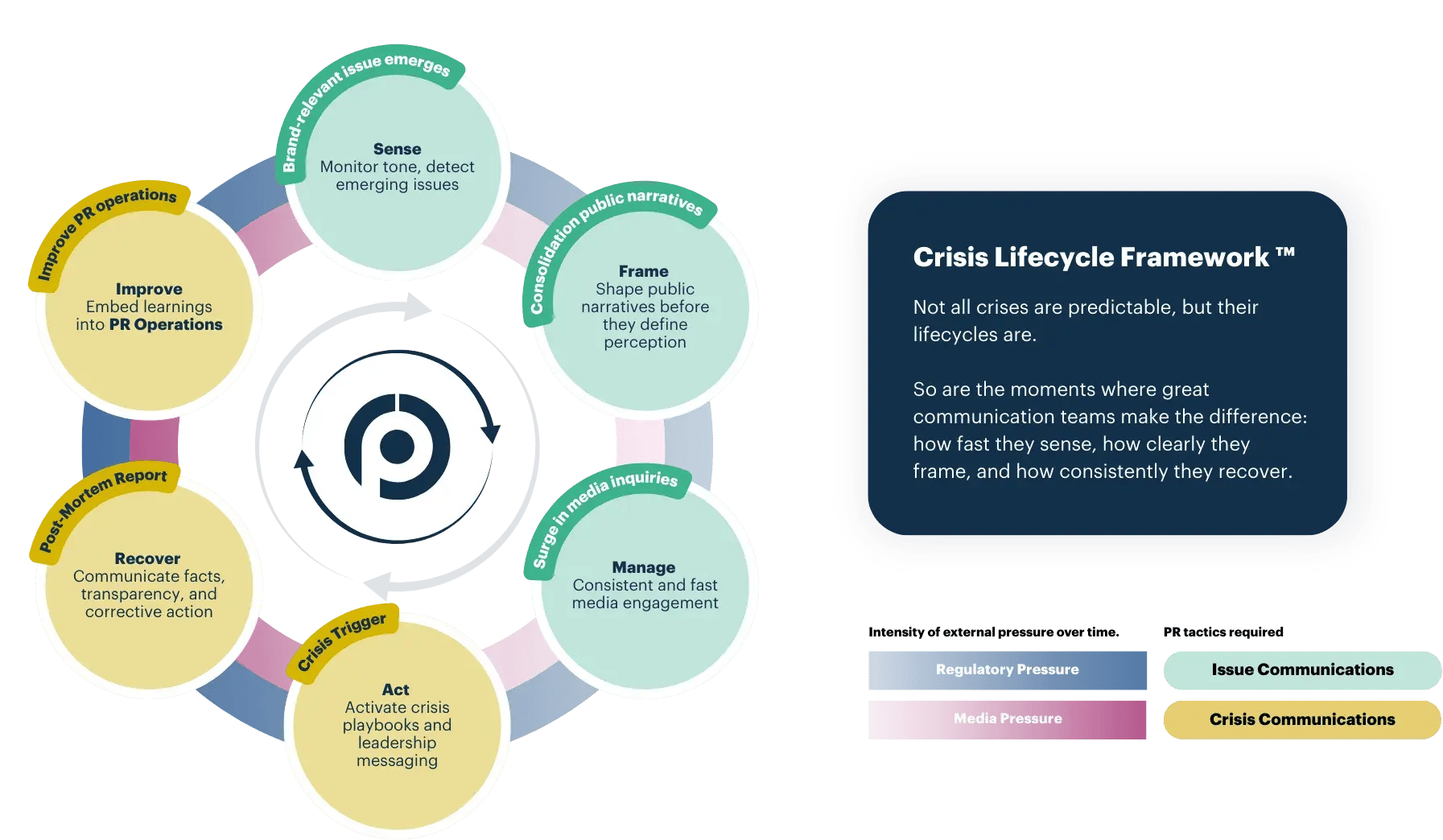

How to use Presspage’s Crisis Lifecycle Framework IRL

Frameworks only help if they translate into action.

Presspage’s Crisis Lifecycle Framework breaks response into six stages:

Sense → Frame → Manage → Act → Recover → Improve

The framework is simple on purpose. It gives teams a shared language when things move fast.

Here’s how that actually applies to the scenarios you’ve just read.

1. Sense

This is your early-warning system. In an AI context, “sense” means monitoring more than just news coverage.

You should be actively checking:

- Search summaries

- AI chatbot answers about your brand

- Social screenshots of AI outputs

- Executive impersonation attempts

- Sudden spikes in inbound questions from sales or HR

Practical move:

Add AI platforms to your regular crisis monitoring routine. If you're only keeping tabs on traditional media, you’re already late.
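One lightweight way to fold this into a routine: collect the answers AI tools give about your brand (pasted in by hand or gathered by your own tooling) and scan them for claims you already know to be false. The sketch below assumes exactly that setup. `Snapshot`, `flag_snapshots`, the tool labels, and the sample claims are all hypothetical, and nothing here calls a real AI platform.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    tool: str     # free-text label for the AI tool that produced the answer
    prompt: str   # the question that was asked
    answer: str   # the answer text as collected


def flag_snapshots(snapshots, watch_phrases):
    """Return (tool, phrase) pairs where an answer contains a watched phrase.

    Matching is a case-insensitive substring check, so it only catches
    near-verbatim repeats of a known false claim, not paraphrases.
    """
    hits = []
    for snap in snapshots:
        text = snap.answer.casefold()
        for phrase in watch_phrases:
            if phrase.casefold() in text:
                hits.append((snap.tool, phrase))
    return hits


# Hypothetical answers collected during a weekly check
snapshots = [
    Snapshot("chatbot A", "Who runs Company X?",
             "Jane Doe is the CEO of Company X."),
    Snapshot("search summary", "Company X controversy",
             "Company X was fined in 2015 for mislabelling."),
]

# Claims already known to be false, taken from the crisis log
watch_phrases = ["fined in 2015", "John Smith is the CEO"]

hits = flag_snapshots(snapshots, watch_phrases)
for tool, phrase in hits:
    print(f"{tool}: repeats '{phrase}'")
```

A human still needs to read the flagged answers. A substring match is a tripwire, not a verdict.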

2. Frame

This is where most teams fall behind. Framing means publishing your version of events before speculation solidifies.

We’re not talking about a perfect statement, but a factual anchor.

That can be:

- A short newsroom post acknowledging the issue

- A timestamped holding statement

- A basic FAQ page addressing what’s known and unknown

Practical move:

Create pre-approved crisis templates now. The middle of a live incident is not the moment to debate commas with legal.

3. Manage

This is simple internal coordination. You’re getting comms, legal, security, leadership, HR, and customer-facing teams on the same page.

That includes:

- Centralising updates in one newsroom location

- Briefing executives with consistent talking points

- Making sure sales and recruiters know what’s happening

- Logging every development

Practical move:

Treat your newsroom as the single source of truth. Everything else should point back to it.

4. Act

Now the response goes external. This includes:

- Correcting misinformation publicly

- Responding to journalists

- Updating stakeholders

- Publishing verified evidence when needed

Practical move:

When correcting AI errors, quote the false claim directly and replace it with verified facts. Ambiguous denials don’t travel as far as specific corrections.

5. Recover

Once the immediate noise settles, it’s time to assess damage.

Look at:

- Media tone

- Stakeholder trust

- Search and AI summaries post-crisis

- Internal feedback

Practical move:

Run a post-crisis audit on your owned content. What gaps allowed misinformation to spread?

6. Improve

This is where resilience is built.

Update:

- Crisis playbooks

- Approval workflows

- Executive media training

- Content hygiene practices

Practical move:

Turn every AI incident into a checklist update. Today’s edge case becomes tomorrow’s standard scenario.

FAQ on AI crisis management

What is AI crisis management?

AI crisis management is the practice of handling misinformation, impersonation, fabricated content, and automated summaries generated by AI systems during a reputational incident.

It extends traditional crisis comms by accounting for machine-generated narratives.

How is an AI-driven PR crisis different from a traditional one?

There’s often no single publisher to contact, misinformation spreads faster, and AI systems may continue repeating errors even after corrections are issued.

Speed and owned media become much more important.

How do you stop AI from spreading incorrect information about your company?

You start by correcting the original source, then publishing clear, structured content on your own channels. AI tools learn from what’s already online.

You can’t control every output, but you can influence what machines learn from.

What should you do first when a deepfake appears?

Verify internally, publish a factual statement quickly, and centralise updates in your brand newsroom.

Avoid emotional responses. Stick to evidence.

Can owned media really influence AI narratives?

Yes. Newsrooms, executive bios, FAQs, and official statements are primary source material for many AI systems.

If your owned content is outdated or fragmented, AI fills in the gaps.

Final Thoughts

AI hasn’t replaced (and won’t replace) the role PR plays, but it has raised the bar.

Your newsroom now feeds machines as much as journalists, and your response speed shapes automated summaries. How you structure your content now strongly influences how narratives are interpreted.

The fundamentals still apply, though: clarity, consistency, credibility…on steroids!

Want to pressure-test your crisis readiness?

We’re hosting a live Crisis Simulator webinar where you’ll walk through a real-time AI-driven crisis scenario and make decisions as events unfold. It’s practical, slightly uncomfortable (in a good way), and designed for comms teams dealing with exactly the situations described in this article.

👉 Join the Crisis Simulator: How to manage a deepfake scandal in real time
